GPT 101 - Part 2 - How Transformers Work

Breaking up and digesting content using the Transformer model architecture

Recap and Introduction to How Transformers Work

In our previous article, “GPT 101 - Part 1”, we explored the meaning behind the GPT acronym (Generative Pretrained Transformer), discussed different types of generative AI, and explained some basic terms. Now, let's dive into how these fascinating AI models actually work.

The Transformer architecture was first described in a 2017 paper titled “Attention Is All You Need”, written by eight researchers, most of them working at Google at the time. (One of those researchers subsequently left Google and co-founded a startup called Character.ai, which recently struck a licensing deal that brought him back to…Google.)

Unlike the original paper, this article doesn't require you to be an engineer (I am not one myself): we'll use plenty of everyday analogies and examples to make these complex concepts easy to understand!

Breaking Down Language: Tokens and Processing

Processing Tokens

When you input text into a GPT model, it first breaks the text down into smaller pieces called tokens. A token is the smallest unit of text that the GPT model processes, and it can be a full word, part of a word, or even a punctuation mark. Think of it like a LEGO brick, which you can use as a full piece but also as part of something else you’re building. Each token is then converted into a number (its ID in the model's vocabulary) that the model can work with.

💡 Analogy: Imagine you're sending a letter to a friend in a different country. Before you send it, you need to translate it into a language they understand. Tokenization is like translating the text into a language the model understands (numbers), so it can process and interpret the information.
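To make this concrete, here is a toy tokenizer in Python. It is a sketch under clearly stated assumptions: the vocabulary and its ID numbers are invented, and it uses a simple greedy longest-match rule, whereas real GPT models use byte-pair encoding (BPE) with a much larger vocabulary learned from data. It still shows the two steps described above: splitting text into pieces, then swapping each piece for a number.

```python
# Toy tokenizer: greedy longest-match against a tiny, hand-made vocabulary.
# ASSUMPTION: this vocabulary and its IDs are invented for illustration;
# real GPT models use byte-pair encoding (BPE) with a learned vocabulary.
VOCAB = {
    "the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4, ".": 5,
    "token": 6, "ization": 7, "un": 8, "believ": 9, "able": 10,
}


def tokenize(text):
    """Split text into vocabulary pieces, always taking the longest match."""
    pieces = []
    # Lowercase, separate the period, and split on whitespace.
    for word in text.lower().replace(".", " .").split():
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shorter ones.
            for end in range(len(word), start, -1):
                if word[start:end] in VOCAB:
                    pieces.append(word[start:end])
                    start = end
                    break
            else:
                raise ValueError(f"no vocabulary entry covers {word[start:]!r}")
    return pieces


def encode(text):
    """Replace each piece with its numeric ID, which is what the model sees."""
    return [VOCAB[piece] for piece in tokenize(text)]


print(tokenize("The cat sat on the mat."))    # ['the', 'cat', 'sat', 'on', 'the', 'mat', '.']
print(encode("The cat sat on the mat."))      # [0, 1, 2, 3, 0, 4, 5]
print(tokenize("Unbelievable tokenization"))  # ['un', 'believ', 'able', 'token', 'ization']
```

Note how "unbelievable" ends up as three pieces that are not words on their own: that is the LEGO-brick idea in action.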

The Heart of GPT: The Transformer

At the core of GPT models, there is something called a Transformer. Let's break down its key components:

1. Attention Mechanism

This is the superpower of the Transformer. It allows the model to focus on different parts of the sentence when processing each word or token.

Self-Attention

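The model looks at a token and considers its relationship with the other tokens in the sentence. For example, in the sentence "The cat sat on the mat," when processing the word "sat," the model might pay particular attention to "cat" to work out who is doing the sitting. (In GPT models, a token can only look at the tokens that come before it, so "sat" can see "The cat" but not "on the mat"; this is called causal, or masked, attention.)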

💡 Analogy: Think of this like reading a book and highlighting important parts as you go. As you read each word, you remember the key details from earlier in the sentence (or even the paragraph) to make sure you understand the full meaning. The self-attention mechanism is like the model's highlighter—it helps it focus on the most relevant words.
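The highlighter can be sketched in a few lines of Python. This follows the scaled dot-product attention recipe from the 2017 paper (score each pair of tokens with a dot product, divide by the square root of the vector size, turn the scores into weights with softmax, then take a weighted average), but it simplifies one thing: a real Transformer first passes each token vector through learned query, key and value matrices, which this sketch skips. The `causal` flag shows the GPT-style restriction where a token only looks at itself and earlier tokens. The function names and the hand-made vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors, causal=False):
    """Scaled dot-product self-attention over a list of token vectors.

    SIMPLIFICATION: queries, keys and values are the input vectors themselves;
    a real Transformer first multiplies them by learned weight matrices.
    Returns (outputs, weights); weights[i][j] is how much token i attends to j.
    """
    n, d = len(vectors), len(vectors[0])
    outputs, weights = [], []
    for i, query in enumerate(vectors):
        # GPT-style (causal) attention lets token i see only tokens 0..i.
        visible = range(i + 1) if causal else range(n)
        scores = [sum(q * k for q, k in zip(query, vectors[j])) / math.sqrt(d)
                  for j in visible]
        w = softmax(scores) + [0.0] * (n - len(scores))  # hidden tokens get 0
        # Each output is the attention-weighted average of the vectors.
        outputs.append([sum(w[j] * vectors[j][k] for j in range(n)) for k in range(d)])
        weights.append(w)
    return outputs, weights


# Hypothetical 2-number vectors for "The cat sat".
tokens = ["the", "cat", "sat"]
vecs = [[0.1, 0.0], [1.0, 0.5], [0.8, 0.6]]
_, weights = self_attention(vecs)
for tok, w in zip(tokens, weights[2]):  # how "sat" spreads its attention
    print(f"sat -> {tok}: {w:.2f}")
```

With these made-up numbers, "sat" gives its largest weight to "cat", because their vectors point in a similar direction. In a trained model, the vectors (and therefore the weights) come from training rather than from hand-picked values.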

2. Layers: Building Understanding Step by Step

Transformers are made up of multiple layers, each consisting of two main parts:

Self-Attention: This part helps the model work out which words (or parts of words) are important for each token in the context of the sentence.

Feed-Forward Network: After attention has gathered information from the surrounding tokens, this small neural network processes each token's vector on its own, transforming it into a richer representation that is passed on to the next layer.

💡 Analogy: Imagine you're printing an image using offset printing. In this process, each layer of color (cyan, magenta, yellow, and black) is applied separately. At first, each layer might not look like much on its own, but as the layers are combined, they build up to create a full, richly detailed image. Similarly, in a Transformer, each layer builds on the output of the layer before it and adds a new level of understanding. By the time the text has passed through all the layers, the model has a far richer picture of it, just as the final printed image is clear and vibrant.
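Here is a rough sketch of how layers stack, in plain Python. Each layer applies attention (mixing information across tokens) and then a small feed-forward network (processing each token on its own), and each part's output is added back onto its input, a "residual connection" as in the original Transformer. To keep it short, the sketch assumes random untrained weights, a single attention head with no learned projections, and no layer normalization, all of which a real GPT model would handle differently. The sizes (`d_model`, `d_hidden`, `n_layers`) are arbitrary illustrative choices.

```python
import math
import random


def softmax(xs):
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def attention(xs):
    """Causal self-attention, single head, no learned projections (simplified)."""
    d = len(xs[0])
    out = []
    for i, q in enumerate(xs):
        scores = [sum(a * b for a, b in zip(q, xs[j])) / math.sqrt(d) for j in range(i + 1)]
        w = softmax(scores)
        out.append([sum(w[j] * xs[j][k] for j in range(i + 1)) for k in range(d)])
    return out


def feed_forward(x, w1, w2):
    """Applied to one token vector: expand, apply ReLU, project back down."""
    hidden = [max(0.0, h) for h in matvec(w1, x)]
    return matvec(w2, hidden)


def transformer_layer(xs, w1, w2):
    # Part 1: attention mixes information across tokens; the result is added
    # back onto the input (a residual connection).
    xs = [[a + b for a, b in zip(x, y)] for x, y in zip(xs, attention(xs))]
    # Part 2: the feed-forward network processes each token on its own,
    # again with a residual connection.
    return [[a + b for a, b in zip(x, feed_forward(x, w1, w2))] for x in xs]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
d_model, d_hidden, n_layers = 4, 8, 3  # arbitrary illustrative sizes
layers = [(random_matrix(d_hidden, d_model, rng), random_matrix(d_model, d_hidden, rng))
          for _ in range(n_layers)]

xs = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(5)]  # 5 token vectors
for w1, w2 in layers:  # each layer's output feeds the next layer
    xs = transformer_layer(xs, w1, w2)
print(len(xs), len(xs[0]))  # 5 4
```

The output has the same shape as the input (one vector per token), which is what lets layers be stacked: each layer's output becomes the next layer's input, like another pass of ink on the same sheet.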

3. Positional Encoding: Keeping Things in Order

Since Transformers process tokens in parallel rather than sequentially, they need a way to understand the order of the tokens. Positional encoding adds information about the position of each token in the sequence, helping the model understand the order and structure of the text.

💡 Analogy: Think of positional encoding like a set of numbered steps in a dance routine. Even though you might practice the steps individually, you need to know the order they go in to perform the full routine correctly. Positional encoding tells the model where each word fits in the overall sequence, ensuring everything stays in the right order.
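The original 2017 paper adds a fixed pattern of sine and cosine waves to each token's vector, with a different frequency for each pair of dimensions. A minimal Python sketch of that formula follows; the function names and example vectors are hypothetical. Many GPT models learn their position vectors during training instead of using this fixed formula, but the idea of adding position information to each token vector is the same.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal encodings from "Attention Is All You Need".

    Dimension pairs (2i, 2i+1) use sin and cos of pos / 10000**(2i / d_model),
    so each pair oscillates at its own frequency.
    """
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            # i - i % 2 is the pair's even index ("2i" in the paper).
            angle = pos / 10000 ** ((i - i % 2) / d_model)
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add each position's encoding to the token vector at that position."""
    table = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, table)]


# Hypothetical: the same word appearing three times has identical embeddings...
same_word = [[0.5, 0.5, 0.5, 0.5] for _ in range(3)]
with_positions = add_positions(same_word)
# ...but after adding positions, each occurrence has a different vector.
print(with_positions[0] == with_positions[1])  # False
```

This is how the model can tell the first occurrence of a word from the second, just as numbered steps tell you where each move belongs in the routine.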

The Language Map: Embeddings and Vectors

To truly understand how GPTs interpret language, we need to explore two more key concepts: embeddings and vectors.

Vectors: Coordinates in the Language Universe

Vectors are like arrows that point in a specific direction. In AI, a vector is a list of numbers that represents a word, phrase, or sentence. Each word gets its own unique vector, helping the model understand how words relate to one another.

💡 Analogy: Imagine you're playing a treasure hunt game, where each word is like a treasure chest hidden on a map. The vector is like the GPS coordinates that tell you exactly where that chest is located. Even if two treasures are far apart, they could still be on a similar path (or have similar meanings), and the vectors help the model figure that out.
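One common way to measure whether two vectors "point the same way" is cosine similarity, which compares their directions and ignores their lengths, a bit like two treasures lying on the same path at different distances. The sketch below uses three invented 3-number vectors purely for illustration; real models use learned vectors with hundreds or thousands of numbers.

```python
import math

# Invented 3-number vectors for illustration only; real models learn vectors
# with hundreds or thousands of numbers.
vectors = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Close to 1.0: same direction; 0.0: perpendicular; -1.0: opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    length_a = math.sqrt(sum(x * x for x in a))
    length_b = math.sqrt(sum(y * y for y in b))
    return dot / (length_a * length_b)


print(f"cat vs dog: {cosine_similarity(vectors['cat'], vectors['dog']):.2f}")
print(f"cat vs car: {cosine_similarity(vectors['cat'], vectors['car']):.2f}")

# Doubling a vector's length (a treasure further along the same path)
# leaves the similarity unchanged.
far_cat = [2 * x for x in vectors["cat"]]
print(f"cat vs far_cat: {cosine_similarity(vectors['cat'], far_cat):.2f}")
```

With these made-up numbers, "cat" and "dog" score much higher than "cat" and "car", which is the kind of relationship the model relies on.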

Embeddings: Creating the Language Map

Embeddings are a way to convert words (more precisely, tokens) into vectors so that the AI can work with them. They're like special coordinates assigned to each word, placing it on a multi-dimensional map where similar words end up closer together. Nobody draws this map by hand: the model learns the coordinates during training.

💡 Analogy: Think of embeddings as the process of turning words into puzzle pieces that fit into the big picture of language. When you place "king" on the map, the AI also knows where to place "queen," "prince," and "throne," because they all belong in the same area of the puzzle. This helps the model understand not just the individual words but also how they relate to each other in meaning.
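In code, an embedding layer is essentially a lookup table: one row of numbers per token ID, and "embedding" a token means fetching its row. This sketch uses a hypothetical six-word vocabulary with hand-made 2-number rows (so they can be pictured as points on a flat map) and finds a word's nearest neighbours by straight-line distance. In a real model the table is learned during training, not written by hand.

```python
import math

# Hypothetical vocabulary and embedding table (one row per token ID).
# These 2-number rows are invented so they can be pictured on a flat map;
# a real model learns rows with hundreds or thousands of numbers.
vocab = ["king", "queen", "prince", "throne", "banana", "apple"]
token_id = {word: i for i, word in enumerate(vocab)}
embedding_table = [
    [0.90, 0.80],  # king
    [0.85, 0.90],  # queen
    [0.80, 0.70],  # prince
    [0.70, 0.95],  # throne
    [0.10, 0.20],  # banana
    [0.15, 0.10],  # apple
]


def embed(word):
    """An embedding lookup just fetches the row for the word's token ID."""
    return embedding_table[token_id[word]]


def nearest(word, k=3):
    """Return the k other words whose points lie closest on the map."""
    target = embed(word)
    others = [w for w in vocab if w != word]
    return sorted(others, key=lambda w: math.dist(target, embed(w)))[:k]


print(nearest("king"))  # ['queen', 'prince', 'throne']
```

`math.dist` (Python 3.8+) computes the straight-line distance between two points; "banana" and "apple" sit far away in their own corner of this toy map.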

Putting It All Together

A GPT processes input text by breaking it down into tokens, using embeddings to place each token on its language map, adding information about each token's position in the sequence, and then using the attention mechanism, layer after layer, to work out how the tokens relate to one another. This is what allows it to grasp the context and meaning of the text.

Example: "The quick brown fox jumps over the lazy dog."

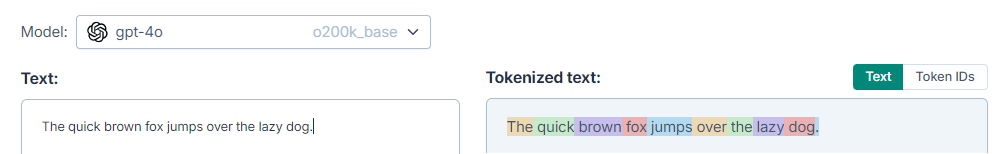

1. Tokenization: The model breaks this sentence into tokens: ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog", "."] (but keep in mind that a token may not necessarily be a full word)

2. Embeddings and Vectors: Each token is converted into a vector using embeddings. In our language map analogy, "fox" and "dog" would be placed relatively close to each other in the animal region, while "quick" and "lazy" might be in opposite areas of an adjective region.

3. Positional Encoding: The model adds positional information to each token's vector, ensuring it knows the order of words in the sentence.

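4. Self-Attention: For each token, the model looks at the other tokens to understand their relationships. As an illustration (real attention weights are learned during training and are rarely this tidy), when processing "jumps":

It might pay high attention to "fox" (the subject) and "dog" (the object of the preposition "over").

It might pay medium attention to "quick" and "lazy" as descriptive elements.

It might pay low attention to "the" and "."

In a GPT model specifically, attention only looks backwards: "jumps" can see "The quick brown fox" but not the words after it, so the connection to "over the lazy dog" is made when those later tokens look back at "jumps".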

5. Transformer Layers: The sentence passes through multiple layers of the Transformer:

In early layers, it might focus on basic grammatical structures and word relationships.

In middle layers, it could start understanding the action (jumping) and its participants (fox and dog).

In later layers, it might grasp nuances like the contrast between "quick" and "lazy".

6. Output: After passing through all the layers, each token is represented by a vector that reflects the sentence's structure and meaning in context. A GPT model then uses the final vector to predict which token should come next.
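The first four steps of the example can be strung together in a short Python sketch. Several simplifications are assumed: the tokenizer just splits on spaces, the embeddings are random numbers rather than learned ones, and attention uses no learned projections. Because nothing is trained, the printed weights for "jumps" carry no real meaning. What the sketch does show is the mechanics: position information is added to each vector, and with GPT-style causal attention "jumps" can only weigh the five tokens up to and including itself, with weights that sum to 1.

```python
import math
import random

sentence = "The quick brown fox jumps over the lazy dog."

# Step 1: tokenization (simplified: split off the period, then split on spaces;
# real GPT tokenizers use learned subword pieces).
tokens = sentence.replace(".", " .").split()

# Step 2: embeddings. Hypothetical random 8-number vectors, one per distinct
# token; a trained model would look these up in a learned table.
rng = random.Random(42)
d = 8
table = {tok: [rng.gauss(0.0, 1.0) for _ in range(d)] for tok in dict.fromkeys(tokens)}
vectors = [table[tok] for tok in tokens]


# Step 3: positional encoding, using the sinusoidal formula from the 2017 paper.
def position_signal(pos):
    signal = []
    for i in range(d):
        angle = pos / 10000 ** ((i - i % 2) / d)
        signal.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return signal


vectors = [[v + p for v, p in zip(vec, position_signal(pos))]
           for pos, vec in enumerate(vectors)]


# Step 4: causal self-attention weights for one token: scaled dot-product
# scores against itself and earlier tokens only, turned into weights by softmax.
def attention_weights(vectors, i):
    scores = [sum(a * b for a, b in zip(vectors[i], vectors[j])) / math.sqrt(d)
              for j in range(i + 1)]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


position = tokens.index("jumps")
for tok, weight in zip(tokens, attention_weights(vectors, position)):
    print(f"{tok:>6}: {weight:.2f}")
```

In a trained model, steps 5 and 6 would follow: the vectors would pass through many layers of attention and feed-forward networks before the final vector is used to predict the next token.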

Summary & Conclusion (for now)

The Transformer architecture was first defined in a 2017 paper and is at the core of GPT models. It uses the attention mechanism to detect the relationships between tokens (words, or parts of words), across many stacked layers (each one refining the interpretation produced by the layer before), while keeping track of their order (positional encoding).

All of this is represented numerically, as vectors (embeddings) that capture multidimensional relationships between tokens, so that a computer can process them. Notice that I am not saying they can be “understood by a computer”, so as not to confuse this with our human definition of understanding the meaning of sentences.

That’s all for now. Hope the analogies and examples helped you understand the more technical concepts. In the next installment, we will cover how content is generated by GPTs, in (sometimes overly) creative ways.